The Evolution of AI Frameworks

AI frameworks serve as the backbone of the intelligent economy, playing a pivotal role in AI development. They function as the operating systems of the AI technology ecosystem, bridging the gap between academic innovation and commercialization. AI frameworks have propelled AI from theory to practical applications, marking the advent of an era focused on scenario-based AI applications. These frameworks are considered vital infrastructure for the development of AI.

How AI Frameworks Work

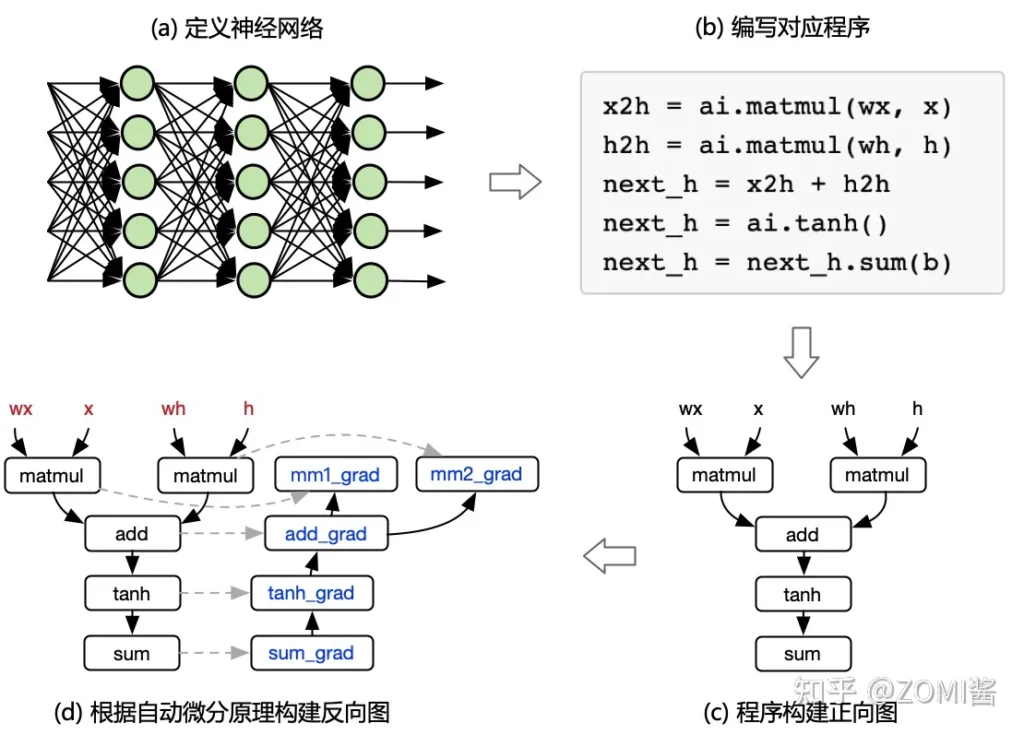

At its mathematical core, an AI framework represents and performs automatic differentiation. On top of that representation, it gives developers and applications the tools and libraries they need to build neural networks for deep learning. The overall process is shown below:

[1]

Beyond this core mathematical representation, an AI framework has to solve many practical problems. How does it implement multi-threaded operator acceleration for real neural networks? How does it make a program execute on a GPU or NPU? How does it compile and optimize the code that developers write? A commercial-grade AI framework therefore needs to work systematically through the specific problems that arise at each layer in order to provide better development features:

Front-end (user-facing): how to flexibly express a deep learning model?

Operators (executing computations): how to ensure the execution performance and generality of each operator?

Differentiation (updating parameters): how to compute derivatives automatically and efficiently?

Backend (system related): how to run the same operator on different acceleration devices?

Runtime: how to automatically optimize and schedule network models for computation?
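To make the front-end, operator, and differentiation layers above more concrete, here is a minimal sketch of reverse-mode automatic differentiation on scalars. It is an illustration only and not the implementation of any particular framework; the `Value` class and its method names are hypothetical and chosen just for this example.

```python
import math


class Value:
    """A scalar node in a computation graph that is built as the code runs."""

    def __init__(self, data, parents=(), grad_fns=()):
        self.data = float(data)
        self.grad = 0.0
        self._parents = parents    # inputs of the operator that produced this node
        self._grad_fns = grad_fns  # one local-derivative function per parent

    # "Operators": each one computes a result and records how to differentiate it.
    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data + other.data, (self, other),
                     (lambda g: g, lambda g: g))

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data * other.data, (self, other),
                     (lambda g: g * other.data, lambda g: g * self.data))

    def tanh(self):
        t = math.tanh(self.data)
        return Value(t, (self,), (lambda g: g * (1.0 - t * t),))

    # "Differentiation": walk the graph backwards and apply the chain rule.
    def backward(self):
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)  # a node is appended after all of its inputs

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, grad_fn in zip(node._parents, node._grad_fns):
                parent.grad += grad_fn(node.grad)


# "Front-end": the model is expressed as ordinary Python arithmetic.
x, y = Value(3.0), Value(4.0)
z = x * y + x
z.backward()
print(z.data, x.grad, y.grad)  # 15.0 5.0 3.0
```

Here `x` is used twice, so its gradient accumulates contributions from both paths (4 from the product and 1 from the sum). Real frameworks apply the same idea to tensors and dispatch each operator to device-specific kernels, which is where the backend and runtime layers come in.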

The Deepening Phase of AI Frameworks

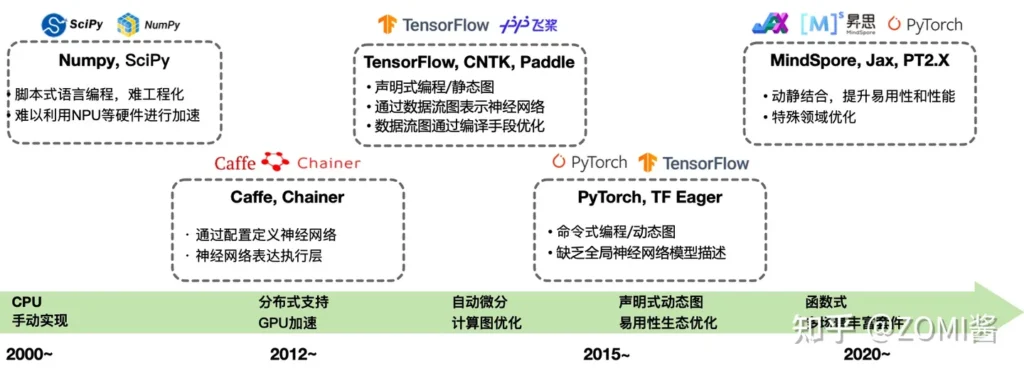

In the early 2000s, AI frameworks were in their infancy: computational power was limited, and neural networks had little impact. Traditional machine learning tools were not optimized for neural networks, early frameworks were hard to use, and support for GPUs and NPUs was scarce. From 2012 to 2014, deep neural networks gained prominence alongside frameworks such as Caffe, Chainer, and Theano. The boom from 2015 to 2019 was marked by models such as ResNet and by the rise of TensorFlow and PyTorch. In the deepening phase from 2020 onwards, TensorFlow and PyTorch prevailed, Chainer's developers moved to PyTorch, CNTK declined, and Keras was integrated into TensorFlow, reflecting the dynamic evolution of AI frameworks.

The Evolution of AI Frameworks

As AI continues to advance and expand into diverse application scenarios, with an increasing focus on cross-domain integration, new trends are emerging that place higher demands on AI frameworks. Notably, the rise of super-large-scale models such as GPT-3 and ChatGPT has raised the bar even further. The requirements include full-scenario multitasking support, heterogeneous computing support, and aggressive compilation optimization. In response, the teams behind major AI frameworks are exploring next-generation designs, each with its own advances:

Huawei’s Ascend MindSpore: This framework has made significant breakthroughs in full-scenario collaboration and trustworthiness.

MegEngine by Megvii: MegEngine focuses on deep integration of training and inference, treating the two as a single workflow.

PyTorch 2.X: PyTorch, now hosted under the Linux Foundation, introduces a new architecture in its 2.x releases, with particular emphasis on graph mode.

Future Trends in AI Frameworks

AI frameworks are expected to address a wide array of challenges and advancements, which can be summarized into several key trends:

Full-Scenario AI Frameworks: AI frameworks must support the deployment of network models across diverse platforms, including edge, cloud, and various devices. This trend has led to the proliferation of AI hardware and diverse software tools, resulting in the need for standardization and compatibility among AI frameworks. The absence of a unified intermediate representation layer presents challenges for model migration and increases deployment complexity.

Usability Enhancements: Future AI frameworks will focus on both frontend convenience and backend efficiency. On the frontend, this means providing comprehensive APIs and the ability to convert between frontend languages, so that development is more user-friendly. On the backend, it means offering high-quality dynamic-to-static graph conversion so that the runtime can execute efficiently. Frameworks must support flexible, user-friendly development during model training, high-performance execution during deployment, and convenient conversion between dynamic and static graphs. PyTorch 2.0's graph compilation mode is at the forefront of this trend.
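The idea behind dynamic-to-static graph conversion can be sketched in a few lines of plain Python. This is a toy tracer under simplifying assumptions (no control flow, only `+` and `*`), and it is not how PyTorch 2.0 or any other framework implements graph capture; `Tracer`, `trace`, and `run` are hypothetical names used only for this illustration.

```python
import operator


class Tracer:
    """Symbolic stand-in for a value: records each operation instead of computing it."""

    def __init__(self, graph, name):
        self.graph, self.name = graph, name

    def _record(self, op, other):
        rhs = other.name if isinstance(other, Tracer) else other
        out = Tracer(self.graph, f"t{len(self.graph)}")
        self.graph.append((out.name, op, self.name, rhs))
        return out

    def __add__(self, other):
        return self._record(operator.add, other)

    def __mul__(self, other):
        return self._record(operator.mul, other)


def trace(fn, arg_names):
    """Run the dynamic Python function once on tracers to capture a static graph."""
    graph = []
    out = fn(*(Tracer(graph, name) for name in arg_names))
    return graph, out.name


def run(graph, output_name, **inputs):
    """Execute the captured graph without calling the original Python function."""
    env = dict(inputs)
    for out, op, lhs, rhs in graph:
        right = env[rhs] if isinstance(rhs, str) else rhs  # named value or constant
        env[out] = op(env[lhs], right)
    return env[output_name]


def model(x, w):
    # Written in ordinary "dynamic" style, as a developer would during training.
    return x * w + 1.0


graph, out_name = trace(model, ["x", "w"])
print(len(graph))                           # 2 recorded operations
print(run(graph, out_name, x=2.0, w=3.0))   # 7.0
```

Once the graph exists as data, a backend is free to optimize it (for example by fusing operations) before execution, which is the motivation for offering this conversion in the first place.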

Large-Scale Distributed AI Frameworks: The emergence of super-large-scale AI models, such as GPT-3 with its 175 billion parameters, presents new challenges. These models require large datasets, significant computing power, and efficient memory usage. Memory must hold the parameters, activations, gradients, and optimizer states. Training such models calls for powerful computing resources and efficient communication between devices in distributed training. Ensuring correctness, performance, and availability when training on exascale (E-scale) AI clusters, as well as compressing models for deployment, is also part of the evolving landscape.
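A rough calculation shows why a model of this size cannot be trained on a single device. The sketch below assumes mixed-precision training with the Adam optimizer, which is a common setup but is not stated in this article: 2 bytes per parameter for fp16 weights, 2 for fp16 gradients, and 12 for fp32 optimizer state (master weights, momentum, variance). Activations are left out because they depend on batch size and sequence length, and the 80 GB device capacity is a hypothetical figure.

```python
# Assumed bytes per parameter for mixed-precision Adam training (an assumption,
# not a figure from the article).
BYTES_PER_PARAM = {
    "fp16 parameters": 2,
    "fp16 gradients": 2,
    "fp32 master parameters": 4,
    "fp32 Adam momentum": 4,
    "fp32 Adam variance": 4,
}


def model_state_bytes(num_params):
    """Memory for parameters, gradients, and optimizer states (no activations)."""
    return {name: num_params * size for name, size in BYTES_PER_PARAM.items()}


def min_devices(total_bytes, device_bytes):
    """Smallest number of devices whose combined memory can hold total_bytes."""
    return -(-total_bytes // device_bytes)  # integer ceiling division


GPT3_PARAMS = 175_000_000_000
DEVICE_BYTES = 80 * 10**9  # hypothetical 80 GB accelerator

breakdown = model_state_bytes(GPT3_PARAMS)
total = sum(breakdown.values())

for name, size in breakdown.items():
    print(f"{name:>24}: {size // 10**9:>5} GB")
print(f"{'total':>24}: {total // 10**9:>5} GB")
print("minimum devices for model states alone:", min_devices(total, DEVICE_BYTES))
```

Under these assumptions the model states alone come to 2,800 GB, or at least 35 such devices before any activations are stored. This is why distributed frameworks partition parameters, gradients, and optimizer states across devices and why communication efficiency matters so much.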

Scientific Computing Integration: AI frameworks will deepen their integration with traditional scientific computing. New languages and frameworks, like Taichi, combine AI technologies with scientific computing methods. This merging of AI with scientific computing will enable researchers to apply AI methods to established scientific problems. This convergence is evident in three main directions: replacing traditional computation models with AI neural networks, using AI to solve problems with traditional scientific computation models, and leveraging AI frameworks to accelerate equation solving without changing the underlying scientific model.

Principal AI Frameworks

The choice of a deep learning framework depends on various factors, including your specific needs, your preferences, and the type of projects you're working on. Each of the three frameworks covered here (TensorFlow, PyTorch, and PaddlePaddle) has its strengths, and the "best" one for you may vary. Here's an overview of each framework:

TensorFlow:

Popularity: TensorFlow is one of the most widely adopted deep learning frameworks with a large and active community.

Ecosystem: TensorFlow offers an extensive ecosystem that includes TensorFlow.js for web applications and TensorFlow Lite for mobile.

Production-Ready: TensorFlow is well-suited for production deployment and has tools like TensorFlow Serving for serving models.

TF2 and Keras: TensorFlow 2.x integrates the Keras high-level API, making it user-friendly and flexible.

PyTorch:

Dynamic Computation Graph: PyTorch's dynamic computation graph makes debugging and experimentation easier, and it is often considered more intuitive.

Research-Oriented: PyTorch is popular in academic and research settings due to its flexibility and easy debugging.

Tight Integration with Python: It has a more Pythonic feel, making it easier for developers familiar with Python to get started.

PaddlePaddle:

Popularity in China: PaddlePaddle is developed by Baidu and has gained significant popularity in China. It's well supported in the Chinese deep learning community.

User-Friendly for Beginners: It’s known for being user-friendly and provides many prebuilt models and tools for developers.

Large Scale and Industry Focus: PaddlePaddle is designed for large-scale and industry-focused applications.

Considerations for choosing the right framework

Use Case: Consider the specific applications you’re interested in. Some frameworks might be better suited for computer vision tasks, while others excel in natural language processing.

Community and Support: A strong community can be a valuable resource for learning and troubleshooting. TensorFlow and PyTorch have large, active communities, whereas PaddlePaddle has a strong presence in China.

Learning Curve: Your familiarity with Python and your experience in deep learning can affect your choice. If you're new to deep learning, you might find PyTorch's dynamic computation graph or TensorFlow's Keras API more approachable. If you are an industry practitioner in China, you may already be working with PaddlePaddle.

Deployment: Think about where you’ll deploy your models. TensorFlow has tools like TensorFlow Serving for production deployments.

Personal Preference: Sometimes, it comes down to personal preference. You may find one framework more comfortable to work with based on your preferences.

Conclusion

Demystifying AI starts with a solid understanding of its underlying mechanisms. Choosing the right framework for your industry-specific projects is pivotal to applying AI successfully in real-world scenarios. As AI continues to play a transformative role across sectors, both this understanding and a careful framework selection process are essential for driving progress and achieving tangible outcomes.

About Juan D. Guerra

This article is credited to ZOMI酱 on 知乎 (Zhihu).